TL;DR: “This document is long; I don’t want to read it. If only there were a way to summarize it…”. How might this happen? Cue Automatic Text Summarization.

Automatic Text Summarization

If you are anything like me, when you are given a document, news article, long email or the like, you will immediately look for the summary or the TL;DR. Summaries distil a document down to its essential points and generally make written information quicker and easier to read. Given the ever-rising amount of content available online, being able to quickly separate interesting articles from irrelevant ones is an increasingly important skill.

Wouldn’t it be great if we could have a computer produce these for us? How would that be possible? Well, I’m glad you asked. This is exactly the remit of Automatic Text Summarization, which aims to have computers produce human-quality summaries of written content.

I am currently undertaking an MSc summer project with T-DAB.AI on this subject, and I think it is a super cool and exciting field that I wanted to share. Within text summarization, the project has two main areas of focus: 1) how we can better evaluate output summaries, since automatically evaluating summary quality is a challenging problem in its own right, and 2) how we can adapt existing methods to summarize longer documents.

This article is the first of a mini-series on this topic. It introduces the field, and the next two issues will expand on the two themes mentioned above. And of course, I will flag anything else of interest I stumble across. Continue reading if you are interested in hearing more about automatic text summarization and the leading approaches in the field.

Overview of Automatic Text Summarization

People have been trying to get computers to summarize documents automatically for over 60 years, so this is hardly a new problem. Over this period, researchers have tried numerous methods, including rule-based systems built on expert linguistic knowledge, statistical approaches and machine learning methods. In recent years, however, neural networks and deep learning have been applied very successfully across the wider field of Natural Language Processing (NLP), and these methods have come to dominate the leading approaches to automatic summarization.

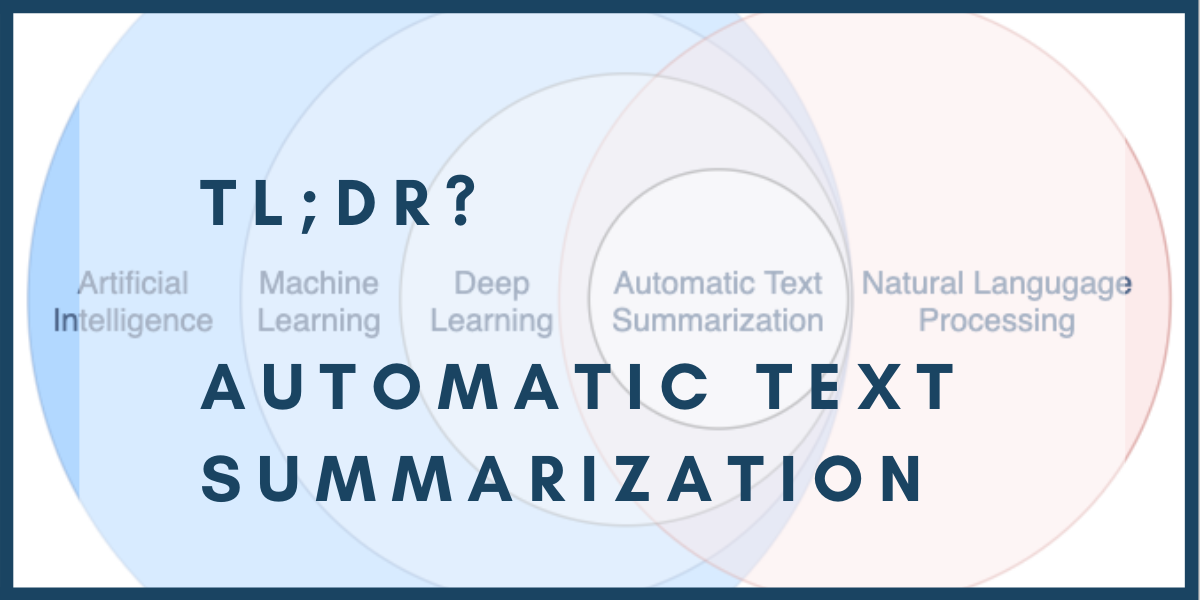

Text summarization currently sits at the intersection of NLP and deep learning, as shown in the diagram above. It often deals with long documents, whereas most NLP tasks only require short inputs. This places automatic text summarization at the frontier of NLP research, and lots of innovation is happening in this space. New studies are published frequently by many of the world’s top universities and by the research labs of tech companies including Google, Facebook and Microsoft. Indeed, part of the reason I think this area is great is that it is so hot right now! So much so that it can be difficult to keep up with the pace of research.

Leading approaches: high level overview

This section aims to give you an impression of the names and methods behind the current leading text summarization architectures. Parts of it are more technical, but I leave most of the technical details to the links provided or to future blog posts for those interested.

Neural networks were first successfully applied to text summarization using the sequence-to-sequence (seq2seq) architecture built from recurrent neural networks (RNNs). In a nutshell, this approach uses two RNNs: an encoder and a decoder. The encoder’s job is to encode the input sequence into a single, high-dimensional vector representation. This vector is then passed to the decoder, whose job is to produce an output sequence given the encoded input. For summarization, the input is a news article or document, and the decoder tries to generate a summary conditioned on the encoded representation of that input. The model is trained using supervised learning: for each article it is encouraged to reproduce a human-written summary, using the standard maximum likelihood procedure (maximizing the probability the model assigns to the reference summary). This seq2seq method served as the foundation for using RNNs for summarization and was extended by numerous studies; if you’re a curious reader, check out the paper Get To The Point: Summarization with Pointer-Generator Networks, as it is perhaps the best-known example (and is nicely written and explained).
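To make the encoder/decoder split a bit more concrete, here is a minimal, untrained sketch in plain Python. Everything in it is a hypothetical illustration rather than any published model: a toy vocabulary, a vanilla tanh RNN cell, random weights and greedy decoding (real systems typically use LSTM or GRU cells, learned embeddings and attention). It shows the encoder squeezing the whole article into one fixed-size vector, the decoder generating from that vector, and the negative log-likelihood that maximum likelihood training would minimize.

```python
import math
import random

# Hypothetical toy setup: vocabulary, hidden size and weights are illustrative only.
VOCAB = ["<s>", "</s>", "the", "cat", "sat", "on", "mat"]
HIDDEN = 8
random.seed(0)

def rand_matrix(rows, cols):
    """Small random weight matrix (untrained, for illustration only)."""
    return [[random.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def rnn_step(w_in, w_h, x, h):
    """One vanilla RNN step: h' = tanh(W_in x + W_h h)."""
    return [math.tanh(a + b) for a, b in zip(matvec(w_in, x), matvec(w_h, h))]

def one_hot(token):
    v = [0.0] * len(VOCAB)
    v[VOCAB.index(token)] = 1.0
    return v

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Separate parameters for the encoder RNN and the decoder RNN.
enc_in, enc_h = rand_matrix(HIDDEN, len(VOCAB)), rand_matrix(HIDDEN, HIDDEN)
dec_in, dec_h = rand_matrix(HIDDEN, len(VOCAB)), rand_matrix(HIDDEN, HIDDEN)
w_out = rand_matrix(len(VOCAB), HIDDEN)  # maps a hidden state to vocabulary scores

def encode(tokens):
    """Read the whole input and return one fixed-size vector (the final hidden state)."""
    h = [0.0] * HIDDEN
    for tok in tokens:
        h = rnn_step(enc_in, enc_h, one_hot(tok), h)
    return h

def summary_nll(article, summary):
    """Negative log-likelihood of a reference summary given the article.

    Uses teacher forcing: at each step the decoder is fed the previous reference
    token and we add -log p(next reference token). Minimizing this sum over a
    training set is maximum likelihood training.
    """
    h = encode(article)  # the decoder starts from the encoded article
    prev, nll = "<s>", 0.0
    for target in summary + ["</s>"]:
        h = rnn_step(dec_in, dec_h, one_hot(prev), h)
        probs = softmax(matvec(w_out, h))
        nll -= math.log(probs[VOCAB.index(target)])
        prev = target
    return nll

def greedy_decode(article, max_len=5):
    """Generate a summary token by token, always picking the most likely next token."""
    h = encode(article)
    prev, out = "<s>", []
    for _ in range(max_len):
        h = rnn_step(dec_in, dec_h, one_hot(prev), h)
        probs = softmax(matvec(w_out, h))
        prev = VOCAB[probs.index(max(probs))]
        if prev == "</s>":
            break
        out.append(prev)
    return out

article = ["the", "cat", "sat", "on", "the", "mat"]
print(len(encode(article)))                  # 8: one fixed-size vector, whatever the input length
print(summary_nll(article, ["cat", "sat"]))  # a positive loss value
print(greedy_decode(article))                # untrained, so the tokens are arbitrary
```

Because the weights are random, the generated "summary" is meaningless; training would adjust `enc_in`, `enc_h`, `dec_in`, `dec_h` and `w_out` by gradient descent to push `summary_nll` down across many article/summary pairs.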

RNNs vs Transformers

The first generation of neural text summarization models were built from RNNs. However, RNNs are notoriously slow to train: each step depends on the result of the previous one, so they cannot easily be parallelized. Cue the Transformer. Transformers were developed with the explicit goal of being easy to parallelize, and can therefore benefit from training with massive amounts of compute on enormous datasets. In a nutshell, Transformers manage this by discarding the step-by-step recurrent computation through the hidden state that is central to RNNs and replacing it with self-attention layers, which allow the network to relate each input word to every other input word. A detailed overview of Transformers is beyond the scope of this article, but the curious reader can find plenty of great resources online, including the original paper (Attention Is All You Need) or The Illustrated Transformer blog post.
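As a rough illustration of "relating each input word to every other input word", here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a simplification under stated assumptions: the word vectors are made-up numbers, and the learned query/key/value projection matrices of a real Transformer are replaced by the identity, so the queries, keys and values are just the input vectors themselves.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with identity projections (Q = K = V = x).

    Real Transformers first multiply x by learned matrices W_Q, W_K and W_V;
    those are omitted here to keep the sketch short.
    Returns (outputs, weights), where weights[i][j] is how much token i attends to token j.
    """
    d = len(x[0])
    weights, outputs = [], []
    for q in x:  # every token acts as a query...
        # ...and is scored against every token (including itself) as a key
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        # output = attention-weighted average of all value vectors
        outputs.append([sum(w[j] * x[j][c] for j in range(len(x))) for c in range(d)])
    return outputs, weights

# Three toy 4-dimensional "word" vectors (made-up numbers for illustration).
tokens = [[1.0, 0.0, 1.0, 0.0],
          [0.0, 1.0, 0.0, 1.0],
          [1.0, 1.0, 0.0, 0.0]]
out, attn = self_attention(tokens)
for row in attn:
    print([round(p, 3) for p in row])
```

Note that no row of `attn` depends on any other row's result, unlike the step-by-step loop in an RNN, which is what allows all positions to be computed in parallel. The price is that the weight matrix has one entry for every pair of tokens.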

Across many NLP tasks, Transformers have replaced RNNs as the model of choice, and summarization is no exception. Because training Transformers is extremely compute-intensive, transfer learning is frequently used in this area (many pre-trained models are available online; see the excellent Hugging Face Transformers library, for example). The current state-of-the-art approaches to summarization use Transformers trained with a pre-training objective tailored to summarization and natural language generation tasks. Notable examples include BART, PEGASUS and ProphetNet. However, while these models work well for short documents (less than 1,000 words), they are highly memory-inefficient for longer documents: self-attention compares every token with every other token, so memory use grows quadratically with input length, and the models cannot be run as-is on long inputs with existing hardware. An exciting research area (and the one I am focusing on in my project) is how to adapt these models so they perform well on longer documents. This is a rapidly developing space, so keep your eyes peeled for more developments!
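To put rough numbers on why long inputs are a problem: in standard self-attention, each layer and each attention head builds an n x n matrix of weights for an input of n tokens. The back-of-the-envelope sketch below assumes hypothetical model dimensions (16 heads, 12 layers), 32-bit floats, a single input sequence, and that every attention matrix is materialized and kept in memory, as in a straightforward training setup. It ignores model weights, other activations and optimizer state, so it only covers this one component.

```python
def attention_matrix_bytes(seq_len, num_heads=16, num_layers=12, bytes_per_value=4):
    """Memory for the seq_len x seq_len attention weights of one input sequence.

    Defaults are hypothetical; bytes_per_value=4 corresponds to float32.
    """
    return seq_len * seq_len * num_heads * num_layers * bytes_per_value

for n in (1024, 4096, 16384):
    gib = attention_matrix_bytes(n) / 2**30
    print(f"{n:>6} tokens -> {gib:8.2f} GiB of attention weights")
```

Under these assumptions, 1,024 tokens need 0.75 GiB for the attention weights alone, 4,096 tokens need 12 GiB, and 16,384 tokens need 192 GiB: making the input 16 times longer multiplies this cost by 256, which is why simply feeding a long document into a short-input model is not an option.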

Concluding Thoughts

Well done to those of you who made it to the end, and thanks for reading! In this article I outlined the field of automatic text summarization and touched on the leading approaches in this area. I plan to follow this post up with a couple more over the next few weeks and months as the project develops. As mentioned, the next two issues will cover evaluation and methods for summarizing long documents (including my research findings in these areas). After that, I am not completely sure yet, but I am certainly open to suggestions. If you have any comments, thoughts or feedback, or just want to connect, please reach out!